This past weekend I participated in a Health 2.0 developer challenge in Boston. The event was a one-day hack-a-thon hosted by Health 2.0 and O’Reilly at a Microsoft building in Cambridge. I was invited to give a brief presentation about using the Kinect for motion tracking and then to help out any groups that wanted to use the Kinect in their projects. At the end of the day, the projects were judged by a panel of experts and one group was named the winner.

More about the competition in a minute, but first, here are the slides from my presentation:

I concluded the presentation by demonstrating the skeleton tracking application I blogged about recently.

After the presentations, the participants self-assembled into groups. One of the groups was talking about trying to build an early warning system for seizures. They asked me to talk to them about using the Kinect in their project. Unfortunately, the application seemed somewhere between difficult and impossible with the Kinect. If you pointed a Kinect at a person at their desk all day long, how would you differentiate a seizure from a routine event like dropping a pen and suddenly bending down to pick it up?

We had a brief discussion about the limitations and capabilities of the Kinect and they pondered the possibilities. After some brainstorming, the group ended up talking to a fellow conference participant, Dan Karlin, who is a doctoral candidate in psychiatry and works in a clinic. Dan explained to us that there are neuromuscular diseases that lead to involuntary muscle motion. These include Parkinson’s but also many that occur as side effects of psychiatric drugs.

Dan told us about a standard test for evaluating these kinds of involuntary motion: the Abnormal Involuntary Movement Scale (AIMS). To conduct an AIMS exam, the patient is instructed to sit down with his hands between his knees and stay as still as possible. The doctor then observes the patient’s involuntary motions. The amount and type of motion (particularly whether or not it is symmetrical) can then act as an indicator for tracking the progression of various neuromuscular disorders.

In practice, this test is performed far less often than it should be. For many patients on psychiatric drugs, doctors are supposed to perform the test at every meeting, but many do not. Even for those who do, the long delays that are normal between patient visits make the data gathered from these tests of limited utility.

Doctor Dan (as we’d begun calling him by this point) said that an automated test that the patient could perform at home would greatly improve doctors’ ability to track the progression of these kinds of diseases, potentially leading to better drug choices and the avoidance of advanced, permanent, debilitating side effects.

On hearing this idea, I thought it would make a perfect application for the Kinect. The AIMS test involves a patient sitting in a known posture. Scoring it only requires tracking the movement of four joints in space (the knees and the hands). The basic idea is extremely simple: add up the total amount of motion those four joints make in all three dimensions and then score that total against a pre-computed expectation or a weighted average of the patient’s prior scores. To me, having already gotten started with the Kinect skeleton tracking data, it seemed like a perfect amount of work to achieve in a single day: record the movement of the four joints over a fixed amount of time, then display the results.
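To make the scoring idea concrete, here is a minimal sketch in Python. It is not the code from our Processing sketch. The joint names, the use of straight-line distance between consecutive frames, and the exponential weighting of prior scores are all assumptions made for illustration.

```python
import math

# Hypothetical joint names; the test only calls for "the knees and the hands".
JOINTS = ("left_hand", "right_hand", "left_knee", "right_knee")


def total_motion(frames):
    """Sum the distance each tracked joint travels between consecutive frames.

    `frames` is a list of dicts mapping a joint name to an (x, y, z) position.
    Using Euclidean distance per frame step is an assumption, not the app's method.
    """
    total = 0.0
    for prev, curr in zip(frames, frames[1:]):
        for joint in JOINTS:
            total += math.dist(prev[joint], curr[joint])
    return total


def weighted_baseline(prior_scores, decay=0.5):
    """Weighted average of prior scores (oldest first), favoring recent ones.

    The exponential `decay` weighting is a hypothetical choice for illustration.
    """
    if not prior_scores:
        raise ValueError("need at least one prior score")
    n = len(prior_scores)
    # The newest score gets weight 1; each older score is discounted by `decay`.
    weights = [decay ** (n - 1 - i) for i in range(n)]
    return sum(w * s for w, s in zip(weights, prior_scores)) / sum(weights)


def relative_score(frames, prior_scores):
    """Ratio of this session's motion to the patient's weighted baseline."""
    return total_motion(frames) / weighted_baseline(prior_scores)
```

A ratio above 1 would mean the patient moved more in this session than their recent baseline, though how that ratio should be interpreted clinically is a question for someone like Doctor Dan, not for this sketch.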

So we set out to build it. I joined the team and we split into two groups. Johnny Hujol and I worked on the Processing sketch, Claus Becker and Greg Kust worked on the presentation, and Doctor Dan consulted with both teams.

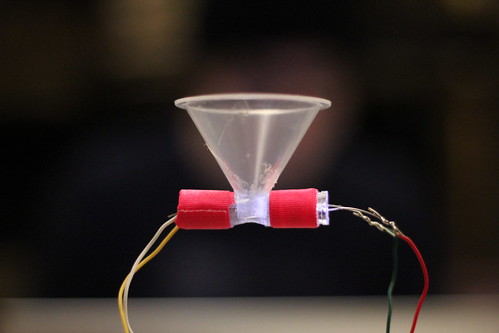

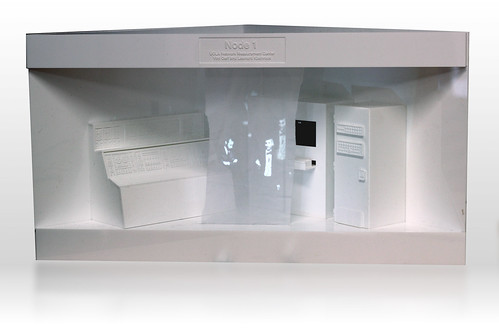

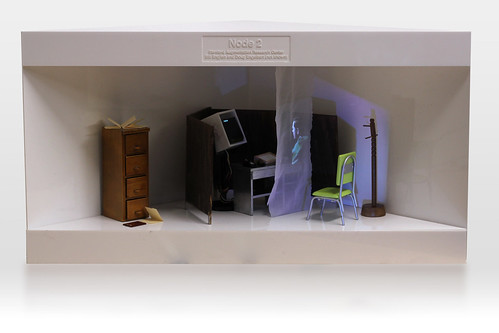

A few hours later, we had a working prototype. Our app tracked the joints for ten seconds, displayed a score to the user along with a progress bar, and graded the score red, yellow, or green based on constant values determined in testing by Doctor Dan.
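The grading step can be sketched roughly like this in Python. Again, this is an illustration rather than the code from our sketch: the threshold values below are placeholders I made up, not the constants Doctor Dan arrived at, and the function names are hypothetical.

```python
# Placeholder thresholds (assumed values, not the constants used in the app).
YELLOW_THRESHOLD = 100.0
RED_THRESHOLD = 300.0

# The app tracked the joints for ten seconds.
DURATION_SECONDS = 10.0


def grade(score, yellow=YELLOW_THRESHOLD, red=RED_THRESHOLD):
    """Map a total-motion score to a color: green, yellow, or red."""
    if score >= red:
        return "red"
    if score >= yellow:
        return "yellow"
    return "green"


def progress(elapsed_seconds, duration=DURATION_SECONDS):
    """Fraction of the recording window completed, clamped to the range 0 to 1.

    This is the value a progress bar would display during the test.
    """
    return max(0.0, min(1.0, elapsed_seconds / duration))
```

Keeping the thresholds as named constants makes it easy to swap in values tuned by a clinician without touching the grading logic.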

Here’s a look at the app running with me demonstrating the amount of motion necessary to achieve a yellow score:

Here’s an example of a more dramatic tremor, which pushes the score into red:

And a “normal” or steady example is here: Triangle Tremor Assesment Score Green

The UI is obviously very primitive, but the application was enough to prove the soundness of the idea of using the Kinect to automate this kind of psychiatric test. You can see the code for this app here: tremor_assessment.pde.

Here’s the presentation we gave at the end of the day for the judges. It explains the medical details a lot better than I have in this post.

And, lo and behold, we won the competition! Our group will continue to develop the app going forward and will travel to San Diego in March to present at Health 2.0’s Spring conference.

I found this whole experience to be extremely inspiring. I met enthusiastic domain experts who knew about an important problem I’d never heard of that was a great match for a new technology I’d just learned how to use. They knew just how to take what the technology could do and shape it into something that could really make a difference. They have energy and enthusiasm for advancing the project and making it real.

For my part, I was able to use the new skills I’ve acquired at ITP to shape the design goals of the project into something that could be achieved with the resources available. I was able to quickly iterate from a barely-there prototype to something that really addressed the problem at hand with very few detours into unnecessary technical dead ends. I was able to work closely with a group of people with radically different levels of technical expertise but deep experience in their own domains in order to build something together that none of us could have made alone.

I’ll post more details about the project as it progresses and as we prepare for Health 2.0.