How can you make a 3D scan using sound? That’s the question that Eric Mika, Jeff Howard, Zeven Rodriguez, Molmol, and I set out to answer this week. Challenged by our 3D Scanning and Visualization class to invent our own scanning method, we decided to take the hard road: scanning using sound generated by physically hitting the object to be scanned. It’s obviously not a very practical approach, but we liked the idea of a scanning method that’s something like physical sonar, one that involves actually touching the object in order to determine its shape.

We quickly sketched a plan to drop BBs from a known height onto an object. We’d drop individual pellets one at a time from known x-y positions in a grid. We’d detect the time of the BB’s fall using a photo interrupter and we’d use the sound of impact to detect the time the BB contacted the object. Eric came up with the brilliant idea of sending the photo interrupter signal as sound as well in order to get the sample rate up high enough to get reasonable accuracy and to make the hardware dead simple.
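To see why the sample rate matters so much, here is a rough back-of-the-envelope sketch in Python. It assumes a drop of about 0.61 m (roughly two feet), no air drag, a 44.1 kHz audio input, and a hypothetical 1 kHz polling loop for comparison; none of these figures are measurements from our rig, and the function names are made up for illustration.

```python
import math

G = 9.81  # m/s^2, standard gravity


def fall_time(height_m):
    """Time for an object dropped from rest to fall height_m (air drag ignored)."""
    return math.sqrt(2.0 * height_m / G)


def depth_per_sample(height_m, sample_rate_hz):
    """Approximate distance the BB covers during one sample period just before impact."""
    impact_speed = math.sqrt(2.0 * G * height_m)  # v = sqrt(2 g h)
    return impact_speed / sample_rate_hz


height = 0.61  # assumed drop height in metres (about two feet)
for rate in (1_000, 44_100):  # hypothetical 1 kHz polling vs. assumed 44.1 kHz audio
    mm = depth_per_sample(height, rate) * 1000
    print(f"{rate:>6} Hz: {mm:.3f} mm of travel per sample")
```

Under these assumptions, one sample at 1 kHz corresponds to roughly 3.5 mm of travel, while one sample at audio rate corresponds to under 0.1 mm, which is the gain that sending the interrupter signal through the audio input buys.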

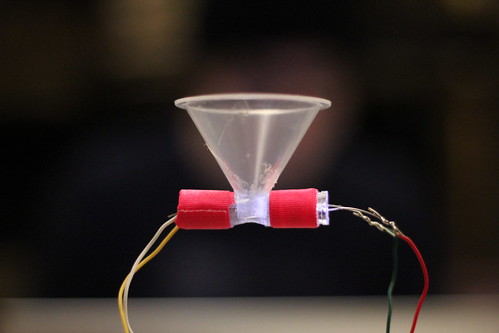

We got to building. We started off by building the interrupter.

We attached a bright LED and a photoresistor to opposite sides of a clear funnel. Then we connected the photoresistor to a power supply and a fixed comparative resistor along with a tap to send the output to the line-in of an audio mixer. The result was a tone that would drop radically in volume when something got between the LED and the photoresistor. We also hooked up a microphone to the same mixer and panned the two signals left and right. We then whipped up an Open Frameworks app to read the audio samples and separate the two channels. Now peak detection on each channel would tell us when the BB left the funnel and when it hit the target.
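The two-channel timing idea can be sketched in a few lines of Python. This is not the Open Frameworks code we actually ran; it is a minimal illustration that assumes the funnel signal and the microphone arrive as two lists of samples in the range -1 to 1, and the thresholds, window size, and function names are all hypothetical.

```python
def envelope(samples, window):
    """Peak envelope: largest absolute value within each trailing window."""
    out = []
    for i in range(len(samples)):
        lo = max(0, i - window + 1)
        out.append(max(abs(s) for s in samples[lo:i + 1]))
    return out


def fall_time_seconds(gate, mic, sample_rate,
                      gate_threshold=0.4, mic_threshold=0.5, window=32):
    """Estimate the fall time from the funnel (gate) and microphone channels.

    Returns None if either event cannot be found.
    """
    # The BB passing the funnel shows up as the gate tone's volume collapsing.
    gate_env = envelope(gate, window)
    dropped = next((i for i, v in enumerate(gate_env) if v < gate_threshold), None)
    if dropped is None:
        return None
    # The trailing window reacts (window - 1) samples late, so step back.
    release = max(0, dropped - (window - 1))

    # The impact is the first loud microphone sample after the release.
    impact = next((i for i in range(release, len(mic))
                   if abs(mic[i]) > mic_threshold), None)
    if impact is None:
        return None
    return (impact - release) / sample_rate
```

Taking an envelope of the funnel channel first is a design choice: a tone swings through zero on every cycle, so comparing raw samples against a threshold would trigger constantly. A missed impact returns `None` here instead of a bogus time.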

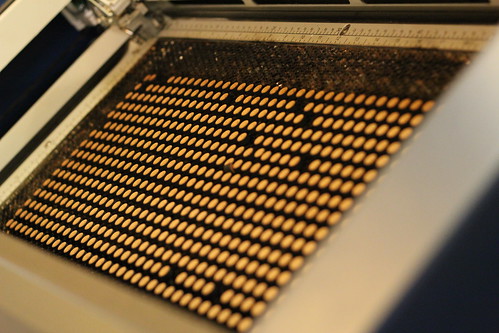

Simultaneously we got the laser started on cutting hundreds of 1/4-inch circles out of a masonite board in a grid. These would act as x-y positioning holes, allowing us to render the corresponding depth measurements extracted from the sound apparatus into voxels. We conducted some basic tests dropping balls into plastic buckets and got readings that seemed to be proportional to the distance of the fall.

Once we had these two things completed we were ready to conduct our first real test. We needed a small simple object to scan that wouldn’t require the use of hundreds of points to cover but still would reveal some kind of 3D shape. After some discussion, we decided to choose a simple bell. In addition to meeting our other criteria we thought the bell might make a nice dinging sound when pelted with BBs.

So, we got in position. Eric sat behind the Open Frameworks sketch and moved the cursor around the x-y grid, square by square, calling out positions to Jeff who matched them on the masonite board and dropped BBs. I was on camera and BB pickup duty. We recorded the resulting data into a CSV file and then brought it into Processing for visualization.

This image looks almost nothing like the round bell we scanned. First of all, there are outliers: some points sit far from the main body of points and are clearly unrelated to it, while others spike wildly high above the main cluster, probably because the microphone failed to pick up the impact correctly. Second, the visualization actually represents the distance between the x-y grid and the bell rather than the shape of the bell itself. We’d initially recorded the fall times themselves as data rather than converting them into distances and subtracting those from a known height in order to match the shape of the object in question.
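Both fixes are simple to express. The Python sketch below converts fall times to surface heights using the free-fall relation d = ½·g·t² (ignoring air drag) and then flags outliers with a median-based test. The drop height, the cutoff of three median absolute deviations, and the function names are illustrative assumptions, not the cleanup we actually applied in Processing.

```python
import statistics

G = 9.81  # m/s^2, standard gravity


def fall_distance(t):
    """Distance fallen from rest after t seconds (air drag ignored)."""
    return 0.5 * G * t * t


def surface_heights(fall_times, drop_height):
    """Height of the object under each grid hole, measured up from the base."""
    return [drop_height - fall_distance(t) for t in fall_times]


def reject_outliers(values, max_dev=3.0):
    """Replace values far from the median with None, keeping grid positions aligned.

    'Far' means more than max_dev median absolute deviations (MAD) away.
    """
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return list(values)  # no spread to judge against; keep everything
    return [v if abs(v - med) <= max_dev * mad else None for v in values]
```

Returning `None` for a rejected reading, rather than deleting it, keeps each value lined up with its x-y hole so that the bad point can be re-dropped or interpolated later.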

Now that the apparatus and process were working (albeit shakily), we agreed to make a second scan. We reconvened a couple of days later and chose a new object: a sewing machine.

We also affixed the masonite grid to a two-foot tall frame and built a base that would catch the flying BBs. For this scan we also committed to recording many more points, a 16 by 40 grid or 640 total points.

After a long period of ball dropping and measurement recording, a scan emerged. And, lo and behold, it actually looked something like a sewing machine.

Here’s a video of the whole process that shows the physical apparatus, the software interface we (mainly Eric) built in Open Frameworks showing the sound inputs as well as the incoming depth measurements, and an interactive browse of the final model (cleaned up and rendered in Processing).

And here’s a video of just the final model by itself, the curve of the top portion of the sewing machine pretty much clearly visible, kind of:

Sound Scan: 3D scanner using falling BBs and sound from Greg Borenstein on Vimeo.

While this scan may not be of the most useful quality, the process of building the hardware and software taught us a lot about the challenges that must be present in any 3D scanning process: the difficulty of calibration, eliminating outliers, scaling and processing the data, displaying it visually in a useful manner, etc.
