In the last few years, new media professors Ian Bogost (Georgia Tech) and Nick Montfort (MIT) have set out to advance a new approach to the study of computing. Bogost and Montfort call this approach Platform Studies:

“Platform Studies investigates the relationships between the hardware and software design of computing systems and the creative works produced on those systems.”

The goal of Platform Studies is to close the distance between the thirty-thousand-foot view of cultural studies and the ant’s-eye view of much existing computer history. Scholars from a cultural studies background tend to stay remote from the technical details of computing systems, while much computer history tends to get lost in those details, missing the wider interpretative opportunities.

Bogost and Montfort want to launch an approach that’s based “in being technically rigorous and in deeply investigating computing systems in their interactions with creativity, expression, and culture.” They demonstrated this approach themselves with the kickoff book in the Platform Studies series for MIT Press:

Racing the Beam: The Atari Video Computer System. That book starts by introducing the hardware design of the Atari and how it evolved in relationship to the available options at the time. They then construct a comprehensive description of the affordances that this system provided to game designers. The rest of the book is a history of the VCS platform told through a series of close analyses of games and how their creators co-evolved the games’ cultural footprints with their understanding of how to work with, around, and through the Atari’s technical affordances.

Bogost and Montfort have put out the call for additional books in the Platform Studies series. Their topic wish list includes a wide variety of platforms from Unix to the Game Boy to the iPhone. In this post, I would like to propose an addition to this list: OpenGL. In addition to arguing for OpenGL as an important candidate for inclusion in the series, I would also like to present a sketch of what a Platform Studies approach to OpenGL might look like.

According to Wikipedia, OpenGL “is a standard specification defining a cross-language, cross-platform API for writing applications that produce 2D and 3D computer graphics.” This dry description belies the fact that OpenGL has been at the center of the evolution of computer graphics for more than 20 years. It has been the venue for a series of negotiations that have redefined visuality for the digital age.

In the introduction to his seminal study, Techniques of the Observer: On Vision and Modernity in the 19th Century, Jonathan Crary describes the introduction of computer graphics as “a transformation in the nature of visuality probably more profound than the break that separates medieval imagery from Renaissance perspective”. Crary’s study itself tells the story of the transformation of vision enacted by 19th century visual technology and practices. However, he recognized that, as he was writing in the early 1990s, yet another equally significant remodeling of vision was underway towards the “fabricated visual spaces” of computer graphics. Crary described this change as “a sweeping reconfiguration of relations between an observing subject and modes of representation that effectively nullifies most of the culturally established meanings of the term observer and representation.”

I propose that the framework Crary laid out in his analysis of the emergence of modern visual culture can act as a guide in understanding this more recent digital turn. In this proposal, I will summarize Crary’s analysis of the emergence of modern visual culture and try to posit an analogous description of the contemporary digital visual regime of which OpenGL is the foundation. In doing so, I will constantly seek to point out how such a description could be supported by close analysis of OpenGL as a computing platform and to answer the two core questions that Crary poses of any transformation of vision: “What forms or modes are being left behind?” and “What are the elements of continuity that link contemporary imagery with older organizations of the visual?” Due to the nature of OpenGL, this analysis will constantly take technical, visual, and social forms.

As a platform, OpenGL has been the stage for two stories that are quintessential to the development of much 21st century computing. It has been the site of a process of industry standardization, and it represents an attempt to model the real world in a computational environment. Under close scrutiny, both of these stories reveal themselves to be tales of negotiation between multiple parties and along multiple axes. These stories are enacted on top of OpenGL as what Crary calls the “social surface” that drives changes in vision:

“Whether perception or vision actually change is irrelevant, for they have no autonomous history. What changes are the plural forces and rules composing the field in which perception occurs. And what determines vision at any given historical moment is not some deep structure, economic base, or world view, but rather the functioning of a collective assemblage of disparate parts on a single social surface.”

As the Wikipedia entry emphasized, OpenGL is a platform for industry standardization. It arose out of the late 80s and early 90s, when a series of competing companies (notably Silicon Graphics, Sun Microsystems, Hewlett-Packard, and IBM) each brought incompatible 3D hardware systems to market. Each of these systems was accompanied by its own graphics programming API that took advantage of that hardware’s particular capabilities. Out of a series of competitive stratagems and developments, OpenGL emerged as a standard, backed by Silicon Graphics, the market leader.

The history of its creation and governance was a process of negotiating both these market convolutions and the increasing interdependence of these graphics programming APIs with the hardware on which they executed. An understanding of the forces at play in this history is necessary to comprehend the compromises OpenGL represents today and how they shape the contemporary hardware and software industries. Further, OpenGL is not a static, complete system, but rather is undergoing continuous development and evolution. A comprehensive account of this history would provide the backstory that shapes these developments and help the reader understand the tensions and politics that structure the current discourse about how OpenGL should change in the future, a topic I will return to at the end of this proposal.

The OpenGL software API co-evolved with the specialized graphics hardware that computer vendors introduced to execute it efficiently. These Graphics Processing Units (GPUs) were added to computers to make common graphical programming tasks faster as part of the competition between hardware vendors. In the process, the vendors built assumptions and concepts from OpenGL into these specialized graphics cards in order to improve the performance of OpenGL-based applications on their systems. Simultaneously, the constraints and affordances of this new graphics hardware influenced the development of new OpenGL APIs and software capabilities. Through this process, the GPU evolved to be highly distinct from the existing Central Processing Units (CPUs) on which all modern computing had previously taken place. The GPU became highly tailored to the parallel processing of large matrices of floating point numbers. This is the fundamental computing technique underlying high-level GPU features such as texture mapping, rendering, and coordinate transformations. As GPUs became more performant and added more features, they became more and more important to OpenGL programming, and the boundary where execution moves between the CPU and the GPU became one of the central features of the OpenGL programming model.

OpenGL is a kind of pidgin language built up between programmers and the computer. It negotiates between the programmers’ mental model of physical space and visuality and the data structures and functional operations which the graphics hardware is tuned to work with. In the course of its evolution it has shaped and transformed both sides of this negotiation. I have pointed to some ways in which computer hardware evolved in the course of OpenGL’s development, but what about the other side of the negotiation? What about cultural representations of space and visuality? In order to answer these questions I need both to articulate the regime of space and vision embedded in OpenGL’s programming model and to situate that regime in a historical context, contrasting it with earlier modes of visuality. In order to achieve these goals, I’ll begin by summarizing Crary’s account of the emergence of modern visual culture in the 19th century. I believe this account will provide both historical background and a vocabulary for describing the OpenGL vision regime itself.

In Techniques of the Observer, Crary describes the transition between the Renaissance regime of vision and the modern one by contrasting the camera obscura with the stereograph. In the Renaissance, Crary argues, the camera obscura was both an actual technical apparatus and a model for “how observation leads to truthful inferences about the world”. By entering into its “chamber”, the camera obscura allowed a viewer to separate himself from the world and view it objectively and completely. But, simultaneously, the flat image formed by the camera obscura was finite and comprehensible. This relation was made possible by the Renaissance regime of “geometrical optics”, where space obeyed well-known rigid rules. By employing these rules, the camera obscura could become, in Crary’s words, an “objective ground of visual truth”, a canvas on which perfect images of the world would necessarily form in obeisance to the universal rules of geometry.

In contrast to this Renaissance mode of vision, the stereograph represented a radically different modern visuality. Unlike the camera obscura’s “geometrical optics”, the stereograph and its fellow 19th century optical devices were designed to take advantage of the “physiological optics” of the human eye and vision system. Instead of situating their image objectively in a rule-based world, they constructed illusions using eccentricities of the human sensorium itself. Techniques like persistence of vision and stereography manipulate the biology of the human perception system to create an image that only exists within the individual viewer’s eye. For Crary, this change moves visuality from the “objective ground” of the camera obscura to possess a new “mobility and exchangeability” within the 19th century individual. Being located within the body, this regime also made vision regulatable and governable by the manipulation and control of that body, and Crary spends a significant portion of Techniques of the Observer teasing out the political implications of this change.

But what of the contemporary digital mode of vision? If interactive computer graphics built with OpenGL are the contemporary equivalent of the Renaissance camera obscura or 19th century stereography, what mode of vision do they embody?

OpenGL enacts a simulation of the rational Renaissance perspective within the virtual environment of the computer. The process of producing an image with OpenGL involves generating a mathematical description of the full three dimensional world that you want to depict and then rendering that world into a single image. OpenGL contains within itself the camera obscura, its image, and the world outside its walls. OpenGL programmers begin by describing objects in the world using geometric terms such as points and shapes in space. They then apply transformations and scaling to this geometry in absolute and relative spatial coordinates. They proceed to annotate these shapes with color, texture, and lighting information. They describe the position of a virtual camera within the three dimensional scene to capture it into a two dimensional image. And finally they animate all of these properties and make them responsive to user interaction.
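To make the camera obscura comparison concrete, here is a minimal sketch in plain Python of the steps just described: geometry defined in its own coordinates, scaled and moved into the world, and then projected through a pinhole onto a flat image. None of this is OpenGL's actual API. The function names (`scale`, `translate`, `project_point`, `to_pixels`) and the convention of a camera at the origin looking down the negative z axis are my own assumptions for illustration.

```python
def scale(point, factor):
    """Uniformly scale a 3D point about the origin."""
    x, y, z = point
    return (x * factor, y * factor, z * factor)


def translate(point, offset):
    """Move a 3D point by an (dx, dy, dz) offset."""
    return tuple(p + o for p, o in zip(point, offset))


def project_point(point, focal_length=1.0):
    """Pinhole projection onto an image plane.

    Assumes a camera at the origin looking down the negative z axis,
    so visible points have z < 0. Farther points land closer to the
    center of the image: this is Renaissance perspective as arithmetic.
    """
    x, y, z = point
    if z >= 0:
        raise ValueError("point must be in front of the camera (z < 0)")
    shrink = focal_length / -z
    return (x * shrink, y * shrink)


def to_pixels(image_point, width, height):
    """Map image-plane coordinates in [-1, 1] to pixel coordinates.

    Pixel y grows downward, so the vertical axis is flipped.
    """
    u, v = image_point
    return ((u + 1.0) * 0.5 * width, (1.0 - v) * 0.5 * height)


# A square described in its own local coordinates.
square = [(-1, -1, 0), (1, -1, 0), (1, 1, 0), (-1, 1, 0)]

# Place it in the world: half size, four units in front of the camera.
world = [translate(scale(p, 0.5), (0, 0, -4)) for p in square]

# "Capture" the three dimensional scene into a two dimensional image.
image = [project_point(p) for p in world]
pixels = [to_pixels(p, 640, 480) for p in image]
```

The whole world exists as numbers before any image does, which is the point of the comparison: the chamber, the scene outside it, and the picture on its wall are all inside the same program.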

To extend Crary’s history, where the camera obscura embodied a “geometric optics” and the stereograph a “physiological optics”, OpenGL employs a “symbolic optics”. It produces a rule-based simulation of the Renaissance geometric world, but leaves that simulation inside the virtual realm of the computer, keeping it as matrices of vertices on the GPU rather than presuming it to be the world itself. OpenGL acknowledges its system is a simulation, but we undergo a process of “suspension of simulation” to operate within its rules (both as programmers and as users of games, etc. built on the system). According to Crary, modern vision “encompasses an autonomous perception severed from any system”. OpenGL embodies the Renaissance system and imbues it with new authority. It builds this system’s metaphors and logics into its frameworks. We agree to this suspension because the system enforces the rules of a Renaissance camera obscura-style objective world, but one that is fungible and controllable.

The Matrix is the perfect metaphor for this “symbolic optics”. In addition to being a popular metaphor of a reconfigurable reality that exists virtually within a computer, the matrix is actually the core symbolic representation within OpenGL. OpenGL transmutes our description of objects and their properties into a series of matrices whose values can then be manipulated according to the rules of the simulation. Since OpenGL’s programming model embeds the “geometric optics” of the Renaissance within it, this simulation is not infinitely fungible. It possesses a grain towards a set of “realistic” representational results, and attempting to go against that grain requires working outside the system’s assumptions. However, the recent history of OpenGL has seen an evolution towards making its system itself programmable, loosening these restrictions by giving programmers the ability to reprogram parts of its default pipeline themselves in the form of “shaders”. I’ll return to this topic in more detail at the end of this proposal.
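As a rough illustration of what it means for the matrix to be the core symbolic representation, the sketch below builds 4x4 transformation matrices in plain Python and applies them to a vertex in homogeneous coordinates. This is not OpenGL code. The helper names (`translation`, `scaling`, `mat_mul`, `mat_vec`) are hypothetical, and I store matrices as row-major nested lists for readability, whereas OpenGL itself conventionally stores them column-major.

```python
def identity():
    """Return a 4x4 identity matrix as nested lists (row-major)."""
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def translation(tx, ty, tz):
    """Matrix that moves a point by (tx, ty, tz)."""
    m = identity()
    m[0][3], m[1][3], m[2][3] = tx, ty, tz
    return m


def scaling(s):
    """Matrix that scales a point uniformly by s."""
    m = identity()
    m[0][0] = m[1][1] = m[2][2] = s
    return m


def mat_mul(a, b):
    """Multiply two 4x4 matrices: the result applies b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def mat_vec(m, v):
    """Apply a 4x4 matrix to a homogeneous vertex (x, y, z, w)."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


vertex = [1.0, 1.0, 0.0, 1.0]  # w = 1 marks a position rather than a direction

# Scale first, then translate: a single matrix encodes both operations.
scale_then_move = mat_mul(translation(0, 0, -4), scaling(0.5))
placed = mat_vec(scale_then_move, vertex)       # [0.5, 0.5, -4.0, 1.0]

# The opposite order yields a different world: the offset gets scaled too.
move_then_scale = mat_mul(scaling(0.5), translation(0, 0, -4))
placed_other = mat_vec(move_then_scale, vertex)  # [0.5, 0.5, -2.0, 1.0]
```

Once an object's placement has been folded into a matrix like this, changing the world means changing sixteen numbers, which is part of what makes the simulated space feel fungible while still obeying geometric rules.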

To illustrate these “symbolic optics”, I would conduct a close analysis of various components of the OpenGL programming model in order to examine how they embed Renaissance-style “geometric optics” within OpenGL’s “fabricated visual spaces”. For example, OpenGL’s lighting model, with its distinction between ambient, diffuse, and specular forms of light and material properties, would bear close analysis. Similarly, I’d look closely at OpenGL’s various mechanisms for representing perspective, from the depth buffer to its various blending modes and fog implementation. Both of these topics, light and distance, have a rich literature in the history of visuality that would make for a powerful launching point for this analysis of OpenGL.
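To give a sense of what such a close reading would be reading, here is a simplified sketch of the arithmetic behind an ambient, diffuse, and specular lighting model and a linear distance fog. It is written in plain Python for a single color channel and a single light, and it leaves out attenuation, emission, and global ambient light. The function names and the dictionary layout for material and light are my own assumptions, not OpenGL's API. The specular term uses a half vector between the light and viewer directions, which is my understanding of how the fixed-function pipeline computes it.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def shade(normal, to_light, to_viewer, material, light):
    """Intensity of one color channel at a surface point, clamped to 1.0."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)

    # Ambient: light with no direction, reaching every surface equally.
    ambient = material["ambient"] * light["ambient"]

    # Diffuse: brightness depends on the angle between surface and light.
    n_dot_l = max(dot(n, l), 0.0)
    diffuse = material["diffuse"] * light["diffuse"] * n_dot_l

    # Specular: a highlight that also depends on where the viewer stands.
    specular = 0.0
    if n_dot_l > 0.0:
        half = normalize(tuple(a + b for a, b in zip(l, v)))
        specular = (material["specular"] * light["specular"]
                    * max(dot(n, half), 0.0) ** material["shininess"])

    return min(ambient + diffuse + specular, 1.0)


def linear_fog(color, fog_color, distance, start, end):
    """Blend a surface color toward the fog color as distance grows."""
    f = (end - distance) / (end - start)
    f = max(0.0, min(1.0, f))  # 1.0 = no fog, 0.0 = fully fogged
    return f * color + (1.0 - f) * fog_color


MATERIAL = {"ambient": 0.25, "diffuse": 0.5, "specular": 0.25, "shininess": 16}
LIGHT = {"ambient": 1.0, "diffuse": 1.0, "specular": 1.0}

# Surface facing both the light and the viewer head-on.
facing = shade((0, 0, 1), (0, 0, 1), (0, 0, 1), MATERIAL, LIGHT)
# Same surface with the light behind it: only ambient light remains.
away = shade((0, 0, 1), (0, 0, -1), (0, 0, 1), MATERIAL, LIGHT)
# The lit surface seen halfway into a fog that spans distances 10 to 30.
fogged = linear_fog(facing, 0.5, 20.0, 10.0, 30.0)
```

Even in this reduced form, the model makes visible the decisions a platform study would want to interrogate: light is split into three named kinds, and distance is expressed as a fade toward a single color.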

To conclude this proposal, I want to discuss two topics that look forward to how OpenGL will change in the future both in terms of its ever-widening cultural application and the immediate roadmap for the evolution of the core platform.

Recently, Matt Jones of British design firm Berg London and James Bridle of the Really Interesting Group have been tracking an aesthetic movement that they’ve been struggling to describe. In his post introducing the idea, The New Aesthetic, Bridle describes this as “a new aesthetic of the future” based on seeing “the technologies we actually have with a new wonder”. In his piece, Sensor-Vernacular, Jones describes it as “an aesthetic born of the grain of seeing/computation. Of computer-vision, of 3d-printing; of optimised, algorithmic sensor sweeps and compression artefacts. Of LIDAR and laser-speckle. Of the gaze of another nature on ours.”

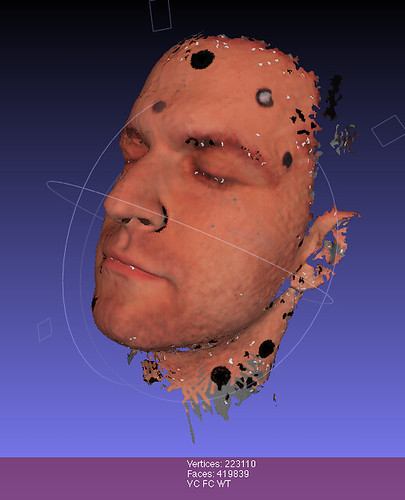

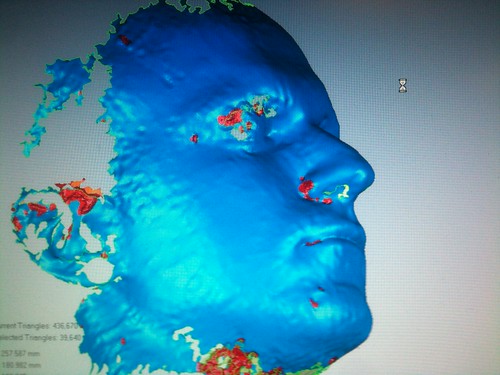

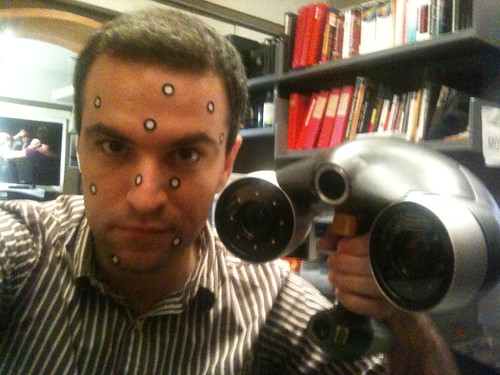

What both Jones and Bridle are describing is the introduction of a “photographic” trace of the non-digital world into the matrix space of computer graphics. Where previously the geometry represented by OpenGL’s “symbolic optics” was entirely specified by designers and programmers working within its explicit affordances, the invention of 3D scanners and sensors allows for the introduction of geometry that is derived “directly” from the world. The result is imagery that feels made up of OpenGL’s symbols (the images are clearly textured three dimensional meshes with lighting) but in a configuration different from what human authors have previously made with these symbols. However, these images also feel dramatically distinct from traditional photographic representation, as the translation to OpenGL’s symbolic optics is not transparent, but instead reconfigures the image along lines recognizable from games, simulations, special effects, and the other cultural objects previously produced on the OpenGL platform. The “photography effect” that witnessed the transition from the Renaissance mode of vision to the modern becomes a “Kinect effect”.

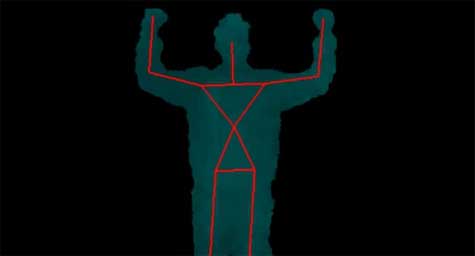

A full-length platform studies account of OpenGL should include analyses of some of these Sensor-Vernacular images. A particularly good candidate subject for this would be Robert Hodgin’s Body Dysmorphic Disorder, a realtime software video project that used the Kinect’s depth image to distort the artist’s own body. Hodgin has discussed the technical implementation of the project in depth and has even put much of the source code for the project online.

Finally, I want to discuss the most recent set of changes to OpenGL as a platform in order to position them within the framework I’ve established here and sketch some ideas of what issues might be in play as they develop.

Much of the OpenGL system as I have referred to it here assumes the use of the “fixed-function pipeline”. The fixed-function pipeline represents the default way in which OpenGL transforms user-specified three dimensional geometry into pixel-based two dimensional images. Until recently, in fact, the fixed-function pipeline was the only rendering route available within OpenGL. However, around 2004, with the introduction of the OpenGL 2.0 specification, OpenGL began to make parts of the rendering pipeline itself programmable. Instead of simply abiding by the logic of simulation embedded in the fixed-function pipeline, programmers began to be able to write special programs, called “shaders”, that manipulated the GPU directly. These programs provided major performance improvements, dramatically widened the range of visual effects that could be achieved, and placed programmers in more direct contact with the highly parallel matrix-oriented architecture of the GPU.
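The shift in programming model can be sketched without a GPU. Real shaders are written in a dedicated shading language (GLSL, in OpenGL's case) and run in parallel on the graphics hardware. The plain Python below only imitates the shape of the idea on the CPU: instead of configuring a fixed set of rules, the programmer hands the renderer a small function that is called independently for every pixel. The names `render`, `gradient_shader`, and `checker_shader` are hypothetical and chosen purely for illustration.

```python
def render(width, height, fragment_shader):
    """Call the supplied shader once per pixel and collect the colors.

    Each call receives normalized coordinates (u, v) in [0, 1], sampled
    at the pixel center, and knows nothing about its neighbors. That
    independence is what lets real hardware run these calls in parallel.
    """
    return [[fragment_shader((x + 0.5) / width, (y + 0.5) / height)
             for x in range(width)]
            for y in range(height)]


def gradient_shader(u, v):
    """Color each pixel by its position: red grows with u, green with v."""
    return (u, v, 0.0)


def checker_shader(u, v, squares=4):
    """A procedural checkerboard with no geometry or lighting involved."""
    on = (int(u * squares) + int(v * squares)) % 2 == 0
    return (1.0, 1.0, 1.0) if on else (0.0, 0.0, 0.0)


gradient = render(2, 2, gradient_shader)
checker = render(4, 4, checker_shader)
```

Nothing in `checker_shader` refers to a world, a camera, or a light. The image is defined directly as a function of position, which hints at why shaders widen the range of achievable effects beyond what the fixed-function rules anticipate.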

Since their introduction, shaders have gradually transitioned from the edge of the OpenGL universe to its center. New types of shaders, such as geometry and tessellation shaders, have been added that allow programmers not just to manipulate superficial features of the image’s final appearance but to control how the system generates the geometry itself. Further, in the core profile of the most recent versions of the OpenGL standard (versions 4.0 and 4.1), the procedural, non-shader approach has been removed entirely.

How will this change alter OpenGL’s “symbolic optics”? Will the move towards shaders remove the limits of the fixed-function pipeline that enforced OpenGL’s rule-based simulation logic or will that logic be re-inscribed in this new programming model? Either way how will the move to shaders alter the affordances and restrictions of the OpenGL platform?

To answer these questions, a platform studies approach to OpenGL would have to include an analysis of the shader programming model, how it provides different aesthetic opportunities than the procedural model, and how those differences have shaped the work made with OpenGL as well as the programming culture around it. Further, this analysis, which began with a discussion of standards when looking at the emergence of OpenGL, would have to return to that topic when looking at the platform’s present prospects and conditions in order to explain how the shader model became central to the OpenGL spec and what that means for the future of the platform as a whole.

That concludes my proposal for a platform studies approach to OpenGL. I’d be curious to hear from people more experienced in both OpenGL and Platform Studies as to what they think of this approach. And if anyone wants to collaborate in taking on this project, I’d be glad to discuss it.