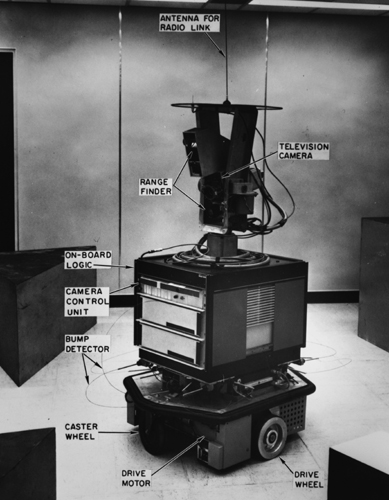

Shakey (1966-1972), Stanford Research Institute’s mobile AI platform, and the Google Street View car.

The project of Artificial Intelligence has undergone a radical unbundling. Many of its sub-disciplines, such as computer vision, machine learning, and natural language processing, have become real technologies that permeate our world. However, the overall metaphor of an artificial human-like intelligence has failed. We are currently struggling to replace that metaphor with new ways of understanding these technologies as they are actually deployed.

At the end of the 1966 spring term, Seymour Papert, a professor in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), initiated the Summer Vision Project, “an attempt to use our summer workers effectively in the construction of a significant part of a visual system” for computers. The problems Papert expected his students to overcome included “pattern recognition”, “figure-ground analysis”, “region description”, and “object identification”.

Papert had assigned a group of graduate students the task of solving computer vision as a summer homework assignment. He thought computer vision would make a good summer project because, unlike many other problems in the field of AI, “it can be segmented into sub-problems which allow individuals to work independently”. In other words, unlike “general intelligence”, “machine creativity”, and the other high-level problems in the AI program, computer vision seemed tractable.

More than forty years later, computer vision is a major sub-discipline of computer science with dozens of journals, hundreds of active researchers, and thousands of published papers. It’s a field that’s made substantial breakthroughs, particularly in the last few years. Many of its results are actively deployed in products you encounter every day, from Facebook’s face tagging to the Microsoft Kinect. But I doubt any of today’s researchers would call any of the problems Papert set for his grad students ‘solved’.

Papert and his graduate students were part of an Artificial Intelligence group within CSAIL led by John McCarthy and Marvin Minsky. McCarthy defined the group’s mission as “getting a computer to do things which, when done by people, are said to involve intelligence”. In practice, they translated this goal into a set of computer science disciplines such as computer vision, natural language processing, machine learning, document search, text analysis, and robotic navigation and manipulation.

Over the last generation, each of these disciplines followed an arc of development similar to computer vision’s: slow, painstaking progress for decades, punctuated by rapid growth sometime in the last twenty years, resulting in increasingly practical adoption and acculturation. However, as they developed, they showed no tendency to become more like McCarthy and Minsky’s vision of AI. Instead they accumulated conventional human and cultural uses. Shakey became the Google Street View car and begat 9-eyes. The semantic web became Twitter and Facebook and begat @dogsdoingthings. Machine learning became Bayesian spam filtering and begat Flarf poetry.

Now, looking back on them as mature disciplines, there’s little to be seen in these fields of their AI parentage. None of them seems to be on the verge of some Singularitarian breakthrough. Each of them is part of an ongoing historical process of technical and cultural co-evolution. Certainly these fields’ cultural and technological developments overlap and relate, and there’s a growing sense of them as some kind of new cultural zeitgeist, but, as Bruce Sterling has said, AI feels like “a bad metaphor” for them as a whole. While these technologies had their birth in the AI project, the signature themes of AI (“planning”, “general intelligence”, “machine creativity”, etc.) don’t do much to describe the way we experience them in their daily deployment.

What we need now is a new set of mental models and design procedures that address these technologies as they actually exist. We need a way to think of them as real objects that shape our world (in both its social and inanimate components) rather than as incomplete predecessors to some always-receding AI vision.

We should see Shakey (and its cousin, the SAIL cart, shown here) as the predecessor not to the Terminator but to Google’s self-driving car.

Rather than personifying these seeing-machines, embodying them as big burly Republican governor-types, we should try to imagine how they’ll change our roads, both for blind people like Steve Mahan, shown here, and for all of the street signs, concrete embankments, orange traffic cones, and overpasses out there.

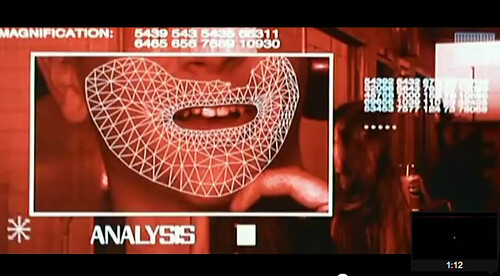

As I’ve written elsewhere, I believe that the New Aesthetic is the first rumbling of our beginning to do just this: to think through these new technologies outside of their AI framing, with close attention to their impact on other objects as well as on ourselves. Projects like Adam Harvey’s CV Dazzle are replacing the AI understanding of computer vision embodied by the Terminator HUD with one based on the actual internal processes of face detection algorithms.

Rather than trying to imagine how computers will eventually think, we’ve started to examine how they currently compute. The “Clink. Clank. Think.” of the famous Time Magazine cover of IBM’s Thomas Watson is becoming “Sensor. Pixel. Print.”