This past weekend, I had the honor of participating in Art && Code 3D, a conference hosted by Golan Levin of the CMU Studio for Creative Inquiry about DIY 3D sensing. It was, much as Matt Jones predicted, “Woodstock for the robot-readable world”. I gave two talks at the conference, but those aren’t what I want to talk about now (I’ll have a post with a report on those shortly). For the week before the start of the actual conference, Golan invited a group of technologists to come work collaboratively on 3D sensing projects in an intensive atmosphere, “conference as laboratory” as he called it. This group included Diederick Huijbers, Elliot Woods, Joel Gethin Lewis, Josh Blake, James George, Kyle McDonald, Matt Mets, Kyle Machulis, Zach Lieberman, Nick Fox-Gieg, and a few others. It was truly a rockstar lineup and they took on a bunch of hard and interesting projects that have been out there in 3D sensing and made all kinds of impressive progress.

One of the projects this group executed was a system for streaming the depth data from the Kinect to the web in real time. This let as many as a thousand people watch some of the conference talks in a 3D interface rendered in their web browser while they were going on. An anaglyphic option was available for those with red-blue glasses.

I was inspired by this truly epic hack to take a shot at an idea I’ve had for a while now: streaming the skeleton data from the Kinect to the browser. As you can see from the video at the top of this post, today I got that working. I’ll spend the bulk of this post explaining some of the technical details involved, but first I want to talk about why I’m interested in this problem.

As I’ve learned more and more about the making of Avatar, one innovation among the many has struck me most. The majority of the performances for the movie were recorded using a motion capture system. The actors would perform on a nearly empty motion capture stage, just them, the director, and a few technicians. After they had successful takes, the actors left the stage, the motion capture data was edited, and James Cameron, the director, returned. Cameron was then able to play the perfect, edited performances back over and over as he chose angles using a tablet device that let him position a virtual camera around the virtual actors. The actors performed without the distractions of a camera on a nearly black box set. The director could work for 18 hours on a single scene without having to worry about the actors getting tired or screwing up any takes. The performance of the scene and the rendering of it into shots had been completely decoupled.

I think this decoupling is very promising for future creative filmmaking environments. I can imagine an online collaborative community triangulated between a massively multiplayer game, an open source project, and a traditional film crew, where some people contribute scripts, some contribute motion capture recorded performances of scenes, others build 3D characters, models, and environments, still others light and frame these for cameras, and still others edit and arrange the final result. Together they produce an interlocking network of aesthetic choices and contributions that yields not a single coherent work, but a mesh of creative experiences and outputs. Where current films resemble a giant shrink-wrapped piece of proprietary software, this new world would look more like Github, a constantly shifting graph of contributions and related evolving projects.

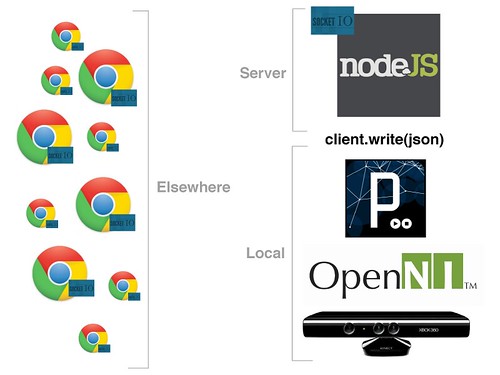

The first step towards this networked participatory filmmaking model is an application that allows remote real time motion capture performance. This hack is a prototype of that application. Here’s a diagram of its architecture:

The source for all of the components is available on Github: Skelestreamer. In explaining the architecture, I’ll start from the Kinect and work my way towards the browser.

Kinect, OpenNI, and Processing

The Processing sketch starts by accessing the Kinect depth image and the OpenNI skeleton data using SimpleOpenNI, the excellent library I’m using throughout my book. The sketch waits for the user to calibrate. Once the user has calibrated, it begins capturing the position of each of the user’s 15 joints into a custom class designed for the purpose. The sketch then sticks these objects into a queue, which is consumed by a separate thread. This thread takes items out of the queue, serializes them to JSON, and sends them to the server over a persistent socket connection that was created when the user calibrated and streaming began. The background thread and queue are a hedge against the possibility of latency in the streaming process. Right now, running everything on one computer, I haven’t seen any latency: the queue nearly always runs empty. I’m curious to see if this level of throughput will continue once the sketch needs to stream to a remote server rather than simply over localhost.

Note, many people have asked about the postdata library my code uses to POST the JSON to the web server. That was an experimental library that was never properly released. It has been superseded by Rune Madsen’s HTTProcessing library. I’d welcome a pull request that got this repo working with that library.

Node.js and Socket.io

The server’s only job is to accept the stream from the Processing sketch and forward it on to any browsers that connect and ask for the data. I thought this would be a perfect job for Node.js, and it turned out I was right. This is my first experience with Node and while I’m not sure I’d want to build a conventional CRUD-y web app in it, it was a joy to work with for this kind of socket plumbing. The Node app has two components. One listens on a custom port to accept the streaming JSON data from the Processing sketch. The other accepts connections on port 80 from browsers. These connections are made using Socket.io. Socket.io is a protocol meant to provide a cross-browser socket API on top of the rapidly evolving state of adoption of the Web Sockets spec. It includes both a Node library and a client JavaScript library, both of which speak the Socket.io protocol transparently, making socket communication between browsers and Node almost embarrassingly easy. Once a browser has connected, Node begins streaming the JSON from Processing to it. Node acts like a simple T-connector in a pipe, taking the stream from one place and splitting it out to many.
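To make the T-connector idea concrete, here is a minimal sketch of the fan-out logic. It is not the Skelestreamer source. It assumes the sketch sends one JSON object per line (the post doesn't say how frames are delimited), and the names `createLineSplitter`, `createTee`, and the `'skeleton'` event are made up for illustration. A "client" here is anything with an `emit(event, data)` method, which is the shape a Socket.io socket has.

```javascript
// Assumption: frames arrive as newline-delimited JSON. TCP delivers arbitrary
// chunks, so a frame may be split across several 'data' events.
function createLineSplitter(onLine) {
  let buffer = '';
  return function feed(chunk) {
    buffer += String(chunk); // accepts a Buffer or a string
    let newline;
    while ((newline = buffer.indexOf('\n')) !== -1) {
      const line = buffer.slice(0, newline).trim();
      buffer = buffer.slice(newline + 1);
      if (line.length > 0) onLine(line);
    }
  };
}

// The "T-connector": one incoming stream, many outgoing clients.
function createTee() {
  const clients = new Set();
  return {
    add(client) { clients.add(client); },
    remove(client) { clients.delete(client); },
    // Parses one line and forwards it to every client.
    // Returns the number of clients the frame was sent to.
    broadcast(line) {
      let frame;
      try {
        frame = JSON.parse(line);
      } catch (err) {
        return 0; // drop malformed frames rather than crash the server
      }
      for (const client of clients) {
        client.emit('skeleton', frame); // hypothetical event name
      }
      return clients.size;
    },
  };
}
```

In a real server the two halves would be wired to the two listeners: something like `net.createServer((socket) => socket.on('data', feed))` on the Processing side, and `io.on('connection', (client) => tee.add(client))` with a matching `tee.remove(client)` on `disconnect` on the Socket.io side.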

Three.js

At this point, we’ve got a real time stream of skeleton data arriving in the browser: 45 floats representing the x-, y-, and z-components of 15 joint vectors, arriving 30 times a second. In order to display this data I needed a 3D graphics library for JavaScript. After the Art && Coders’ success with Three.js, I decided to give it a shot myself. I started from a basic Three.js example and was easily able to modify it to create one sphere for each of the 15 joints. I then used the streaming data arriving from Socket.io to update the position of each sphere as appropriate in the Three.js render function. Pointing the camera at the torso joint brought the skeleton into view and I was off to the races. Three.js is extremely rich and I’ve barely scratched the surface here, but it was relatively straightforward to build this simple application.
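As a rough illustration of the per-frame update, here is a sketch that unpacks 45 floats into 15 named joints and moves one object per joint. The wire format is an assumption: it treats each frame as a flat array in a fixed joint order, and the joint names and their order below are hypothetical, not taken from the actual sketch. The only Three.js feature relied on is that each sphere has a `position` with a `set(x, y, z)` method, so plain stand-in objects work just as well for trying it out.

```javascript
// Hypothetical joint order; the real order is whatever the Processing
// sketch serializes.
const JOINT_NAMES = [
  'head', 'neck', 'torso',
  'leftShoulder', 'rightShoulder', 'leftElbow', 'rightElbow',
  'leftWrist', 'rightWrist', 'leftHip', 'rightHip',
  'leftKnee', 'rightKnee', 'leftAnkle', 'rightAnkle',
];

// Turns a flat [x0, y0, z0, x1, y1, z1, ...] array into { jointName: {x, y, z} }.
function unpackFrame(floats) {
  const expected = JOINT_NAMES.length * 3;
  if (floats.length !== expected) {
    throw new Error('expected ' + expected + ' floats, got ' + floats.length);
  }
  const joints = {};
  JOINT_NAMES.forEach((name, i) => {
    joints[name] = {
      x: floats[3 * i],
      y: floats[3 * i + 1],
      z: floats[3 * i + 2],
    };
  });
  return joints;
}

// Moves each sphere to its joint's latest position.
// `spheres` maps joint name -> object exposing position.set(x, y, z).
function applyFrame(spheres, floats) {
  const joints = unpackFrame(floats);
  for (const name of JOINT_NAMES) {
    const p = joints[name];
    spheres[name].position.set(p.x, p.y, p.z);
  }
}
```

In the browser this would be called from the Socket.io message handler, for example `socket.on('skeleton', (frame) => applyFrame(spheres, frame))`, with the render loop simply drawing whatever positions the spheres currently hold.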

Conclusion

In general I’m skeptical of the browser as a platform for rich graphical applications. I think a lot of the time building these kinds of apps in the browser has mainly novelty appeal, adding levels of abstraction that hurt performance and coding clarity without contributing much to the user experience. However, since I explicitly want to explore the possibilities of collaborative social graphics production and animation, the browser seems a natural platform. That said, I’m also excited to experiment with Unity3D as a potential rich client environment for this idea. There’s ample reason to have a diversity of clients for an application like this where different users will have different levels of engagement, skills, comfort, resources, and roles. The streaming architecture demonstrated here will act as a vital glue binding these diverse clients together.

One next step I’m exploring that should be straightforward is sending the stream of joint positions to CouchDB as they pass through Node on the way to the browser. This will automatically make the app into a recorder as well as a streaming server. My good friend Chris Anderson was instrumental in helping me get up and running with Node and has been pointing me in the right direction for this Couch integration.
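One way the recording step might look, purely as a sketch: batch frames as they pass through Node and hand each batch to a function that writes it to the database. The `{ docs: [...] }` shape matches what CouchDB's `_bulk_docs` endpoint accepts, but everything else here (the `createRecorder` name, the document fields, the batch size) is an assumption for illustration, and the actual HTTP call is left to the caller.

```javascript
// Collects frames and hands them to `flush` in batches.
// `flush` receives a body shaped like { docs: [...] }, ready to be POSTed
// to a CouchDB database's _bulk_docs endpoint by the caller.
// `now` is injectable so recordings can be timestamped deterministically.
function createRecorder(batchSize, flush, now = Date.now) {
  let pending = [];

  function flushNow() {
    if (pending.length === 0) return;
    const body = { docs: pending };
    pending = []; // start a fresh batch before handing the old one off
    flush(body);
  }

  function record(frame) {
    // Hypothetical document layout: one document per frame.
    pending.push({ type: 'skeleton-frame', recordedAt: now(), joints: frame });
    if (pending.length >= batchSize) flushNow();
  }

  return { record, flushNow };
}
```

At 30 frames a second, a batch size of 30 would mean roughly one write per second. Calling `flushNow()` when the performer disconnects saves the tail end of a take.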

Interested in these ideas? You can help! I’d especially love to work with someone with advanced Three.js skills who can help me figure out things like model importing and rigging. Let’s put some flesh on those skeletons…

Where do I get the fsm.jar? What about postdata.jar?

You can download the FSM library here: https://github.com/atduskgreg/Processing-FSM/downloads The postdata library isn’t online yet but may be someday.

And how can I try this example if there isn’t a postdata library?

As now mentioned in the post, use Rune Madsen’s HTTProcessing library.

Hi.

I’m a student, currently attempting to create an application using the Kinect. I found your book and then this. I’m searching for the postdata library. Is there any way we could get it? It is for a final-year project and not for commercial use.

holy smokes, that is incredible! I’m surprised you don’t have 100 comments by now. Have you been able to work on this any more?

I’d like to help you develop this app further. I’m an artist & a graphics programmer, with low-level 3D abilities, but completely new to Node.js & web-sockets. I’d love to help put skins on those bones!

Hi Jack,

Thanks for the offer! I’d love to take you up on it. The first step would be for you to create a 3D model of a person that’s rigged in a way that corresponds to the Kinect skeleton. Joints for: head, neck, right & left shoulder, right & left elbow, right & left wrist, right & left hip, right & left knee, and right & left ankle.

If you send me that as a collada file I can incorporate it into this and write up how I did it. If you want to talk about this more, my email address is my first name dot my last name at gmail.

Greg,

Sent you email, but haven’t heard from you. In the spam folder, maybe?

Check your inbox for a reply from me, Jack.

Hello, I’ve seen the example and it works great, but there are a couple of things I don’t get, and it would be great if you could help me.

– You use Java to create the examples, but I don’t know how you can set up the environment to use Java instead of C++ (I have NITE running with C++).

– How can we compile the example to make it work with modifications? I know we need OpenNI, NITE and SimpleOpenNI, but do you use the build button of Eclipse and that’s it or do you need to import new libraries…etc to make it work. If you could explain a little bit about the environment set-up it would be awesome.

Thank you in advance.

Ok, I think I got it.

But I’d like to improve the example and I can’t since I don’t have the postdata library of Processing, could you publish it please, or at least send me the version you were using here?

Thank you very much.

A link to a replacement library, Rune Madsen’s HTTProcessing, is now included in the post.

I implemented something like this but I have the server off site. I’m finding it tough to get it real time.

Very nice work indeed. We are looking into a project to enable social 3D graphics in social networking, and this seems like a very good start. Thanks; I will try to run the examples when I can.

best regards,

Rufael